Nvidia’s Standing and Potential

Nvidia Corporation (NASDAQ:NVDA) (NEOE:NVDA:CA) has been a lightning rod for investors, who have grappled with the interplay between its AI dominance and the myriad threats to its hegemony, a theme that ran through 2023 and extends into 2024.

In this deep-dive analysis, we weigh these forces and present the key numbers and the latest news. Finally, we provide valuation scenarios that give investors a view of the current risk/reward profile of an investment in Nvidia shares over the next 3 years.

Our conclusion underscores the formidable challenge facing Nvidia’s competitors over the next 1-2 years, which leaves the company as the clear leader in the rapidly expanding accelerated computing market. We contend that this is not accurately priced into the current valuation of the shares, which could drive further significant share price appreciation through 2024, especially as analysts revise their earnings estimates. However, there are risks that merit close monitoring, including Chinese military intervention in Taiwan and tightened U.S. restrictions on chip exports to China.

The Unlikelihood of Competitive Upheaval

Nvidia has been charting a course for the era of accelerated computing for well over a decade. The 2010 GTC (GPU Technology Conference) revolved around GPUs for general-purpose computing, particularly supercomputers. A look back at a slide from the 2010 presentation by Ian Buck, then Senior Director of GPU Computing Software and now General Manager of Nvidia’s Hyperscale and HPC Computing Business, reveals the prescient recognition that GPUs, not CPUs, would underpin the future of computing, with a burgeoning need for accelerated computing. Four years later, big data analytics and machine learning took center stage at the 2014 GTC, as emphasized by CEO Jensen Huang in his keynote address.

With these prescient forecasts, Nvidia began building out its GPU portfolio for accelerated computing early, securing a substantial first-mover advantage. The Ampere and Hopper GPU microarchitectures, launched in May 2020 and March 2022 respectively, yielded the A100, H100, and H200, the world’s most powerful GPUs. Fueled by emerging AI and ML initiatives, these products gave Nvidia a ~90% share of the burgeoning data center GPU market in 2023. Building on its GPUs, Nvidia also ventured into networking, amassing a multibillion-dollar networking business in 2023.

In addition to cutting-edge GPUs and networking solutions (the hardware layer), which offer unrivaled performance for large language model training and inference, Nvidia wields another critical competitive edge: CUDA (Compute Unified Device Architecture), its proprietary programming model for harnessing GPUs (the software layer).

To leverage Nvidia GPUs’ parallel processing prowess, developers require access through a GPU programming platform. Doing so through general, open models like OpenCL is a more laborious, developer-intensive process than utilizing CUDA, which furnishes low-level hardware access and spares developers intricate details through simple APIs—drastically simplifying the use of Nvidia’s GPUs for accelerated computing tasks. Nvidia has heavily invested in crafting specific CUDA libraries for distinct tasks to enhance the developer experience.

Initially released in 2007 (16 years ago!), CUDA is now as central to the AI software ecosystem as the A100, H100, and H200 GPUs are to the hardware ecosystem. Most academic papers on AI, not to mention enterprises harnessing AI, use CUDA acceleration. Even if rivals manage to develop viable GPU alternatives, building a software ecosystem comparable to CUDA’s could take several years. When making investment decisions about AI infrastructure, CFOs and CTOs must weigh developer costs and the level of support for the given hardware and software infrastructure, areas in which Nvidia shines. Although Nvidia GPUs carry a hefty price tag, joining its ecosystem confers cost advantages that lower the total cost of ownership, a compelling sales advantage in our estimation. For now, the world has rallied around the Nvidia ecosystem, making it improbable that corporations will jettison a well-proven solution.

Examining emerging competition, the primary independent adversary for Nvidia in the data center GPU market is Advanced Micro Devices, Inc. (AMD), whose MI300 product family began shipping in Q4 2023. The MI300X standalone accelerator and the MI300A accelerated processing unit represent the foremost real challengers to Nvidia’s AI dominance.

The hardware stack is accompanied by AMD’s open-source ROCm software (akin to CUDA), officially launched in 2016. ROCm has made gradual inroads among renowned deep learning frameworks such as PyTorch and TensorFlow, potentially removing the primary impediment to AMD’s GPUs gaining substantial traction in the market. In 2021, PyTorch announced native AMD GPU integration, paving the way for CUDA-written code to run on AMD hardware.

The Dynamic Landscape of AI: Challenges and Opportunities for Nvidia

Nvidia has undeniably dominated the AI hardware market for over a decade, but the tides of change are beginning to stir. Over the past 15 years, CUDA, Nvidia’s parallel computing platform, has been refined to a state of near-perfection, making it the go-to choice for developers. Despite this, challengers are emerging.

Rise of the Challengers

AMD’s ROCm, touted as a potential CUDA alternative, is still deemed far from flawless, while CUDA maintains its stronghold. Other hardware-agnostic solutions like Triton from OpenAI and Intel’s oneAPI are also evolving. Although these alternative platforms show promise, their path to widespread adoption is riddled with bugs and deficiencies.

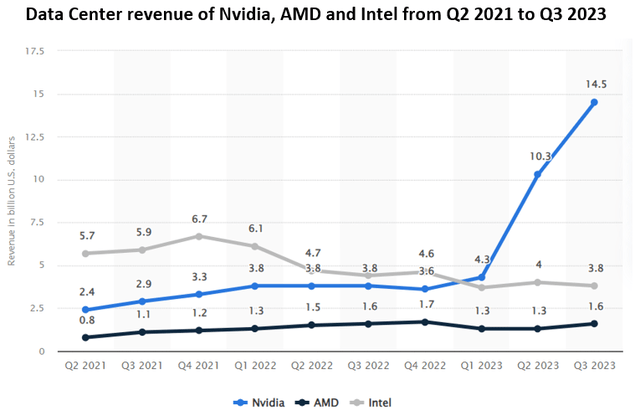

Lisa Su, AMD’s CEO, predicts strong demand for AMD’s solutions in 2024, yet Nvidia’s recent GPU-related revenues overshadow AMD’s projected figures by a significant margin. It’s evident that while potential alternatives are in the pipeline, Nvidia’s supremacy remains unchallenged for the moment.

The hyperscalers, such as Amazon, Microsoft, and Google, have been making strides in developing their own AI chips. While these chips are yet to power customer workloads at scale, they pose a looming threat to Nvidia’s dominance. Amazon’s Trainium and Inferentia chips, along with Microsoft’s Maia, are prime examples of this evolving landscape.

Amazon’s Dual Strategy

Amazon, a leading investor in AI startup Anthropic, recently strengthened its strategic partnership with the company, a move that signals confidence in Amazon’s AI chip line. The introduction of Trainium2, aimed at cost-sensitive AWS customers, is poised to compete with Nvidia, although questions about software compatibility remain.

While Amazon endeavors to fortify its in-house capabilities, its collaboration with Nvidia at AWS re:Invent demonstrates a continued reliance on the latter to sustain competitiveness in the swiftly evolving AI space. This partnership underscores Nvidia’s continued dominance in the AI hardware arena.

Nvidia’s Conundrum in China

The restrictions on AI-related chip exports to China have posed a significant challenge for Nvidia. The company’s pivot to develop chips tailored for the Chinese market reflects its strategic response. However, the growing prominence of Huawei’s Ascend family of AI chips in China presents a formidable competition.

While Chinese tech giants seek to reduce reliance on Nvidia, the limitations on chip manufacturing pose a hurdle. Moreover, concerns over potential U.S. export restrictions on Nvidia’s chips add complexity to this dynamic market landscape. However, there is a growing sense that China is gradually catching up in producing advanced AI chips.

Evolution of the AI Landscape

Efforts to rival Nvidia’s hardware and software infrastructure for accelerated computing continue to burgeon. While some solutions have been in development for years, others are set for market testing in 2024. However, the prevailing sentiment indicates that these alternatives may not supplant Nvidia but rather coexist in the expanding AI market.

Disruptive Forces in the Data Center Networking Market

The technology sector’s landscape is a place of constant upheaval, where movements are often as swift as they are significant. In the realm of data center networking solutions, a market traditionally dominated by Ethernet technology, a formidable challenger has emerged in the form of Nvidia. With a swift and shrewd display of market disruption, Nvidia has fundamentally altered the competitive equilibrium in data center networking, illustrating how quickly a market can be upended.

An Evolution in Networking Standards

For decades, Ethernet stood as the universal protocol for wired computer networking. Its design was tailored to provide a simple, flexible, and scalable interconnect for both local and wide area networks. However, the rise of high-performance computing and expansive data centers paved the way for a burgeoning market opportunity, leading to the rapid adoption of Ethernet networking solutions.

In the midst of this evolution, a new standard, InfiniBand, emerged around the turn of the millennium. Crafted specifically to link servers, storage, and networking devices in high-performance computing environments, InfiniBand prioritized low latency, high performance, low power consumption, and reliability. This focus on optimizing for high-performance computing environments, which are the bastions of AI technologies, propelled its rapid acceptance. Notably, the ascendancy of InfiniBand was underscored by the remarkable rise in its usage, with the number of the world’s Top 100 supercomputers employing InfiniBand networking technology catapulting from 10 in 2005 to a staggering 61 at present.

The primary purveyor of InfiniBand-based networking equipment, Mellanox, founded by former Intel executives in 1999, has long held sway in this domain. Notably, in 2019 a fierce bidding war erupted, with industry heavyweights Nvidia, Intel, and Xilinx (subsequently acquired by AMD) vying to acquire Mellanox. Ultimately, Nvidia emerged victorious, clinching the acquisition with a substantial $6.9 billion offer. This well-timed acquisition proved to be a strategic masterstroke, catapulting Nvidia into a position of strength in the realm of InfiniBand networking technology, a move that received resounding validation with the rapid surge of AI in 2023.

The Riches of Acquisition

Nvidia gained more than Mellanox’s InfiniBand expertise from the acquisition. Mellanox’s prowess extended beyond the InfiniBand standard to high-end Ethernet equipment, including leading adapters, Ethernet switches, and smart network interface cards (SmartNICs). Leveraging these technologies, Nvidia has built competitive networking solutions for customers who adhere to Ethernet standards. The Ethernet-based Spectrum-X platform is a prime example: according to the company, it delivers 1.6 times the networking performance of traditional Ethernet for AI workloads. Notably, major players such as Dell, Hewlett Packard Enterprise Company, and Lenovo Group have committed to integrating Spectrum-X into their servers, a move that promises to speed up AI workloads for their customers.

In addition to InfiniBand and Spectrum-X technologies that typically interconnect entire GPU servers housing 8 Nvidia GPUs, the range of Nvidia’s networking solutions encompasses the NVLink direct GPU-to-GPU interconnect. This technology bears several advantages over the standard PCIe bus protocol employed in connecting GPUs, including direct memory access that eliminates the need for CPU involvement, along with unified memory that enables GPUs to share a common memory pool.

A Wisely Tended Garden of Growth

The rapid growth of Nvidia’s networking business is a testament to the astuteness of the Mellanox acquisition. The division’s revenues have surged, with the most recent quarter, Q3 FY2024, showing a nearly threefold increase that pushed the business past a $10 billion annual run rate.

The overall data center networking market is poised for robust expansion, with an anticipated compound annual growth rate (CAGR) of 11-13%. Within this market, InfiniBand is expected to grow considerably faster, with projections pointing to a CAGR of approximately 40% from its current multi-billion-dollar scale. This promises to give Nvidia another rapidly growing revenue stream, one that we believe the market has yet to fully appreciate.

By deftly fusing Nvidia’s cutting-edge GPUs with its advanced networking solutions in the HGX supercomputing platform, the company has etched out an exemplary sales motion, bolstered further by the launch of the Grace CPU product line. This combination effectively serves as the reference architecture for AI workloads, portending a rapid evolution of this burgeoning market in the years to come.

A Pie That Defies Appetite

The realm of accelerated computing solutions sculpted by the voracious appetite for AI exhibits signs of unrelenting expansion in the foreseeable future. Nvidia’s arsenal of data center products has been precisely calibrated to cater to this burgeoning market, and the company is already reaping the rewards. However, this may only represent the tip of the iceberg.

At a recent AI event hosted by AMD, Lisa Su shared an illuminating forecast: the data center accelerator total addressable market (TAM) is anticipated to grow by more than 70% annually over the next four years, reaching $400 billion in 2027. This spike in demand represents a monumental opportunity for investors, one that current valuations in the sector inadequately reflect, in our opinion.
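The arithmetic behind this forecast can be checked with a short sketch. It assumes that "70% annually over the next four years" means four compounding steps ending in 2027, which is our reading of the statement rather than a breakdown AMD provided. The function names are ours and purely illustrative.

```python
def implied_base(end_value: float, annual_growth: float, years: int) -> float:
    """Back out the starting value implied by an end value and a constant growth rate."""
    return end_value / (1.0 + annual_growth) ** years


def project(start_value: float, annual_growth: float, years: int) -> list[float]:
    """Return the value at the start and after each year of compounding."""
    return [start_value * (1.0 + annual_growth) ** n for n in range(years + 1)]


# Figures quoted in the text: a $400 billion TAM in 2027, growing 70% per year.
# ASSUMPTION: "the next four years" is modeled as four compounding steps.
TAM_2027_BN = 400.0
GROWTH = 0.70
YEARS = 4

base_bn = implied_base(TAM_2027_BN, GROWTH, YEARS)
path_bn = project(base_bn, GROWTH, YEARS)

if __name__ == "__main__":
    for year, value in zip(range(2027 - YEARS, 2028), path_bn):
        print(f"{year}: ${value:,.0f}bn")
```

Under these assumptions, the implied starting market size is roughly $48 billion. Because the quoted rate is "more than 70%", the true implied base would be somewhat lower.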

Because Nvidia’s product portfolio covers the lion’s share of the data center accelerator market and looks set to dominate it in the coming years, a once-in-a-generation opportunity beckons. Moreover, prevailing valuations in the sector do not appear to reflect this shift, a disparity we examine in detail in the discussion that follows.

Accelerated Computing Demand Shows No Signs of Slowing Down

As investors navigate through the stormy waters of the financial markets, they are constantly on the lookout for the next big opportunity. Enter Nvidia, a company that has been making waves in the world of accelerated computing, with its products at the heart of data center operations. Analysts and industry observers are bullish on the trajectory of Nvidia’s growth, citing soaring demand and compelling financial indicators as key drivers for a potential surge in the company’s stock price. Let’s take a closer look at the factors underpinning this narrative and evaluate the investment landscape for Nvidia.

Steady Growth Projections and Market Sentiment

Analyzing the growth trajectory of Nvidia, it becomes apparent that the company stands at the forefront of a rapidly expanding market for accelerated computing in data centers. Recent estimates indicate analysts’ expectations for a 54% year-over-year increase in revenues for 2025, followed by 20% in 2026 and 11% in 2027. However, some market observers argue that these projections could be conservative, given the prevailing trends in the industry. The increasing emphasis on artificial intelligence (AI) and cloud infrastructure by technology giants such as Microsoft, Alphabet, and Amazon further bolsters the case for sustained growth in accelerated computing.
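To see what these year-over-year estimates imply in aggregate, the following sketch compounds them into a cumulative revenue multiple and an equivalent constant annual rate. Only the three growth percentages come from the text; the function names are our own, and treating the estimates as successive annual steps is an assumption.

```python
from math import prod

# Year-over-year revenue growth estimates quoted in the text.
growth_by_year = {2025: 0.54, 2026: 0.20, 2027: 0.11}


def cumulative_multiple(rates) -> float:
    """Compound a sequence of growth rates into a single multiple."""
    return prod(1.0 + r for r in rates)


def implied_cagr(rates) -> float:
    """Constant annual growth rate that gives the same cumulative multiple."""
    rates = list(rates)
    return cumulative_multiple(rates) ** (1.0 / len(rates)) - 1.0


multiple = cumulative_multiple(growth_by_year.values())
cagr = implied_cagr(growth_by_year.values())

if __name__ == "__main__":
    print(f"Cumulative revenue multiple: {multiple:.2f}x")
    print(f"Implied three-year CAGR: {cagr:.1%}")
```

The estimates compound to roughly a doubling of revenue over the three years, which corresponds to a constant annual rate of about 27%.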

“We expect capital expenditures to increase sequentially on a dollar basis, driven by investments in our cloud and AI infrastructure.” – Amy Hood, Microsoft EVP & CFO, Microsoft Q1 FY2024 earnings call.

“We expect fulfillment and transportation CapEx to be down year-over-year, partially offset by increased infrastructure CapEx to support growth of our AWS business, including additional investments related to generative AI and large language model efforts.” – Brian Olsavsky, Amazon SVP & CFO, Amazon Q3 2023 earnings call.

“We continue to invest meaningfully in the technical infrastructure needed to support the opportunities we see in AI across Alphabet and expect elevated levels of investment, increasing in the fourth quarter of 2023 and continuing to grow in 2024.” – Ruth Porat, Alphabet CFO, Alphabet Q3 2023 earnings call.

The repeated mentions of AI in the context of capital expenditures indicate a palpable shift toward accelerated computing, which is poised to garner a significant share of IT budgets in the coming years. This trend underscores the burgeoning demand for Nvidia’s products as organizations pivot towards AI-centric investment strategies, thereby fueling accelerated computing’s ascendancy in the market.

Forecasting Market Growth and Long-term Prospects

With industry forecasts projecting annualized data center GPU market growth rates of 28-35%, there is a palpable sense of optimism surrounding the potential for accelerated computing. While some independent research reports may suggest relatively slower growth rates compared to AMD’s projections, the dynamic nature of the market renders these assessments fluid and subject to rapid change. As the market expands from a lower base, the prospects for accelerated computing in the immediate future remain robust, auguring well for Nvidia and its competitors.

Moreover, the versatility of GPUs in handling diverse computational tasks apart from AI and machine learning workloads underscores their potential to reshape data center environments. As CPUs yield ground to GPUs in terms of cost-effective acceleration of tasks, Nvidia’s position as a frontrunner in the accelerated computing landscape becomes increasingly pronounced, with its dominance poised to extend beyond the realms of AI and ML.

Evolution of Margin Profile and Valuation Outlook

A compelling aspect of Nvidia’s growth narrative is the rapid transformation of its margin profile. Marked by a significant upsurge in the share of top-tier GPUs and networking solutions in its product portfolio, Nvidia’s margins have witnessed a pronounced rebound, reaching impressive levels in its most recent financial quarters. With gross margin touching 70% on a trailing twelve-month basis and net margin soaring above 40%, Nvidia’s financial indicators paint a compelling picture of its profitability and resilience in a competitive landscape.

As we evaluate the outlook for Nvidia’s valuation, it becomes evident that the company is primed for an attractive risk-reward proposition. Fueled by robust demand for accelerated computing and bolstered by expanding margins, Nvidia’s shares present a compelling case for investors seeking exposure to a dynamic and high-growth industry.

In conclusion, the continued surge in demand for accelerated computing in data centers, coupled with Nvidia’s robust financial performance, sets the stage for a compelling investment opportunity. As organizations double down on AI and cloud infrastructure, Nvidia stands poised to reap the rewards of its leading position in the accelerated computing landscape, making it a noteworthy candidate for investors eyeing the next big wave in technology.

The Future of Nvidia: A Rollercoaster Ride or a Smooth Sail?

As 2024 unfolds, investors are eagerly anticipating the trajectory of Nvidia, one of the leading forces in the technology and semiconductor sector. Amid widespread speculation in the market, attention is focused on the estimated $50 billion total market size for data center accelerators, the starting point for the scenarios below.

Scenario Analysis: Projecting Nvidia’s Future

The scenario analysis varies the growth rate of the data center accelerator market and Nvidia’s market share trajectory within it. A 10% annual growth rate is assumed for Nvidia’s Gaming, Professional Visualization, and Auto segment revenues across all three scenarios, so that the company’s remaining businesses are also covered.

From net margin estimates to the calculation of EPS and the determination of an appropriate multiple for share price estimation, every aspect has been meticulously examined to capture the essence of Nvidia’s potential valuation scenarios.
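The chain from revenue to share price described above can be sketched in a few lines. This is a minimal illustration of the mechanics only: the class, its field names, and every input value below are hypothetical placeholders chosen for round arithmetic, not the figures used in the scenarios.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    revenue_bn: float   # total revenue, in billions of dollars
    net_margin: float   # net income divided by revenue
    shares_bn: float    # diluted shares outstanding, in billions
    pe_multiple: float  # price-to-earnings multiple applied to EPS

    def eps(self) -> float:
        """Earnings per share = revenue x net margin / share count."""
        return self.revenue_bn * self.net_margin / self.shares_bn

    def price(self) -> float:
        """Estimated share price = EPS x the chosen earnings multiple."""
        return self.eps() * self.pe_multiple


# HYPOTHETICAL inputs for illustration only; none of these come from the article.
example = Scenario(revenue_bn=100.0, net_margin=0.50, shares_bn=2.5, pe_multiple=30.0)

if __name__ == "__main__":
    print(f"EPS: ${example.eps():.2f}")
    print(f"Estimated share price: ${example.price():.2f}")
```

The estimate is linear in each input, so a given percentage change in the net margin or in the multiple moves the price by the same percentage, which is why the scenarios are most sensitive to those two assumptions.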

Weighting the Scenarios: Base Case, Pessimistic, and Optimistic

The base case scenario assumes that the data center accelerator market doubles in size from 2023 to 2024, and works through what that means for Nvidia’s revenue. The scenario forecasts a robust 53% net margin in 2024, which supports a $1,173 share price by year-end. Considerable uncertainty remains, however, and tempers this otherwise promising outlook.

Embarking on the pessimistic scenario, a more cautious stance is adopted, considering conservative growth dynamics and a potentially rapid adoption of competing products. This path leads to a 30% increase in share price for 2024, but with an aura of diminishing margins and limited upside, indicating the challenges on the horizon.

Venturing into the optimistic scenario, an air of buoyancy prevails as the data center accelerator market’s exponential growth augurs well for Nvidia. With peak margins in 2024 and a more gradual descent, the shares are likely to be priced more aggressively, potentially culminating in a share price close to the $2,000 mark. While optimism abounds, a note of caution resonates, underscoring the need to navigate potential headwinds.

Weighing the Options: A Calculated Gamble

In the global arena of investment, Nvidia’s shares present a tantalizing risk-reward proposition at current levels, beckoning investors to ponder their next move. With probabilities assigned to the scenarios, the base case reigns supreme with a 60% likelihood, trailed by the optimistic scenario at 20-25% and the pessimistic one at 15-20%. However, amidst the labyrinth of probabilities, the tantalizing specter of unforeseen scenarios lingers, urging caution and strategic forethought.
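One way to combine these probabilities with the scenario outcomes is a probability-weighted price, sketched below. Several inputs are assumptions on our part: we take the midpoints of the quoted probability ranges (22.5% and 17.5%, which together with 60% sum to 100%), we round the optimistic outcome to $2,000, and we use a hypothetical current share price to turn the pessimistic scenario’s 30% increase into a price level. The sketch also treats the three prices as comparable despite any differences in their time horizons.

```python
def expected_price(scenarios: dict[str, tuple[float, float]]) -> float:
    """Probability-weighted average of scenario prices.

    `scenarios` maps a name to (probability, price); probabilities must sum to 1.
    """
    total_p = sum(p for p, _ in scenarios.values())
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_p}, expected 1.0")
    return sum(p * price for p, price in scenarios.values())


# HYPOTHETICAL: the article does not state the share price at the time of writing.
HYPOTHETICAL_CURRENT_PRICE = 500.0

scenarios = {
    "base": (0.60, 1173.0),          # probability and price quoted in the text
    "optimistic": (0.225, 2000.0),   # ASSUMED midpoint of 20-25%; price rounded
    "pessimistic": (0.175, HYPOTHETICAL_CURRENT_PRICE * 1.30),  # ASSUMED midpoint of 15-20%
}

weighted = expected_price(scenarios)

if __name__ == "__main__":
    print(f"Probability-weighted price: ${weighted:,.2f}")
```

Changing `HYPOTHETICAL_CURRENT_PRICE` shifts only the pessimistic term, so the weighted figure is driven mainly by the base and optimistic cases.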

Amidst this cornucopia of forecasts and assumptions, the future of Nvidia stands poised at a crucial juncture, teetering between the thrill of a rollercoaster ride and the stability of a smooth sail. As 2024 unfolds, the narrative of Nvidia’s trajectory is shrouded in intrigue, inviting investors to brace themselves for a compelling journey amidst the ever-shifting winds of the market.

The Ebb and Flow of Accelerated Computing in an Ever-Changing Market

The accelerated computing segment is undergoing a dramatic transformation, as demand surges and competition intensifies. The ongoing battle for market dominance raises concerns for both investors and the industry’s leading companies.

The Shifting Landscape

With unprecedented technological advancements and the proliferation of AI and ML-based solutions, companies like Nvidia are experiencing a meteoric rise in demand for their accelerated computing hardware. However, this surge in demand is accompanied by a fierce competitive landscape, challenging the dominance of established players.

Navigating Risk Factors

Amidst the competitive forces, Nvidia faces a myriad of risks, foremost among them being the potential impact of a Chinese military offensive against Taiwan. As an integral component of Nvidia’s supply chain, Taiwan Semiconductor (TSMC) is a linchpin, with crucial implications for the company’s production capabilities. Moreover, geopolitical tensions and export restrictions present additional hurdles for Nvidia’s operations in China.

The complexities of international relations and trade dynamics further compound the uncertainties surrounding Nvidia’s future prospects. The fluid geopolitical landscape and its implications on semiconductor manufacturing highlight the vulnerability of companies operating in this sector to external shocks and disruptive events.

Charting the Course

In spite of the prevailing risks and headwinds, Nvidia’s 2023 performance has been robust, fueled by the escalating demand for accelerated computing solutions. The company is poised to capitalize on the sustained momentum in AI and ML technologies, underpinning its potential for continued growth.

Although concerns regarding increased competition and geopolitical complexities loom large, Nvidia’s valuation suggests an opportune entry point for investors, with a compelling risk-reward proposition. As the market navigates the ebbs and flows of accelerated computing, astute monitoring and calculated judgments remain imperative for stakeholders.
