
Image source: The Motley Fool.

Nvidia (NASDAQ: NVDA)

Q3 2025 Earnings Call

Nov 20, 2024, 5:00 p.m. ET

Nvidia Achieves Record Financial Growth in Q3 2025 Earnings Call

Contents

- Prepared Remarks

- Questions and Answers

- Call Participants

Opening Remarks

Operator

Good afternoon. My name is Jay, and I’ll be your conference operator today. I’d like to welcome you to NVIDIA’s third-quarter earnings call. All lines have been muted to prevent background noise.

After the speakers’ comments, we will have a question-and-answer session. [Operator instructions] Thank you. Stewart Stecker, you may begin.

Stewart Stecker — Senior Director, Investor Relations

Thank you. Good afternoon, everyone, and welcome to NVIDIA’s Q3 fiscal 2025 earnings call. Today, I’m joined by Jensen Huang, our president and CEO, and Colette Kress, our executive vice president and CFO. This call is being webcast live on NVIDIA’s Investor Relations website and will be available for replay until we discuss our financial results for Q4 fiscal 2025. Please note, this call contains forward-looking statements based on current expectations.


There are significant risks and uncertainties regarding future performance. For discussions about factors impacting our financial results, please check our earnings release, the latest Forms 10-K and 10-Q, and reports that we may file on Form 8-K with the SEC. All statements made are as of today, November 20, 2024, based on the information we have at hand. We do not intend to update these statements unless required by law.

Strong Q3 Results

Colette M. Kress — Chief Financial Officer, Executive Vice President

Thank you, Stewart. Q3 was another record quarter for us. We achieved remarkable growth with revenue hitting $35.1 billion, a 17% increase from the previous quarter and a 94% rise year over year, well exceeding our forecast of $32.5 billion.
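The growth rates above can be checked with simple arithmetic. The minimal Python sketch below backs out the prior-period revenue implied by each percentage, and the size of the beat against the $32.5 billion outlook. Because the reported percentages are rounded, the implied figures are approximate. The helper name `implied_base` is illustrative only.

```python
# Figures quoted in the prepared remarks (billions of US dollars).
Q3_REVENUE = 35.1
SEQ_GROWTH = 0.17   # growth vs. the previous quarter
YOY_GROWTH = 0.94   # growth vs. the same quarter a year earlier
OUTLOOK = 32.5      # the earlier Q3 revenue forecast


def implied_base(current: float, growth: float) -> float:
    """Back out the earlier-period value implied by a growth rate."""
    return current / (1 + growth)


prior_quarter = implied_base(Q3_REVENUE, SEQ_GROWTH)
year_ago_quarter = implied_base(Q3_REVENUE, YOY_GROWTH)
beat = Q3_REVENUE - OUTLOOK

print(f"implied prior quarter: ${prior_quarter:.1f}B")
print(f"implied year-ago quarter: ${year_ago_quarter:.1f}B")
print(f"revenue above outlook: ${beat:.1f}B")
```

Dividing by `1 + growth` (rather than multiplying the current figure by `1 - growth`) is what correctly reverses a percentage increase.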

Every market platform showed strong sequential and annual growth, driven by the increasing adoption of NVIDIA’s accelerated computing and AI technologies. Notably, our data center revenue reached $30.8 billion, an increase of 17% sequentially and 112% year-over-year.

The demand for the NVIDIA Hopper platform remains exceptional, with H200 sales rising to double-digit billions of dollars, the fastest product ramp in our history. The H200 offers up to 2x faster inference performance and up to 50% better total cost of ownership.

Cloud service providers accounted for nearly half of our data center sales, with revenues more than doubling year on year. They deployed NVIDIA H200 infrastructure and high-speed networking solutions to manage increasing demand for AI training and inference workloads.

Currently, NVIDIA H200-powered cloud instances are available from AWS, CoreWeave, and Microsoft Azure, with Google Cloud and Oracle Cloud Infrastructure set to follow soon. Growth in regional cloud revenue has been rapid, particularly in North America, India, and the Asia Pacific regions, as these areas adopt NVIDIA Cloud instances and build sovereign cloud capabilities.

Consumer internet revenue more than doubled year on year, as companies expanded their NVIDIA Hopper infrastructure for training AI models and generating AI content. Our Ampere and Hopper platforms played a key role in boosting inference revenue for our customers.

NVIDIA’s position as the largest inference platform globally strengthens our market presence. Our extensive installed base and robust software ecosystem enable developers to optimize their applications for NVIDIA, leading to enhanced performance and cost efficiency. Notably, advancements in our software algorithms have increased Hopper inference throughput fivefold over the last year.

The upcoming NVIDIA NIM release is projected to further enhance Hopper inference performance by an additional 2.4x.

Continuous performance improvements define NVIDIA, yielding economic returns for our users. Blackwell is now fully in production after a successful rollout. We delivered 13,000 GPU samples in Q3, including one of the first Blackwell DGX engineering samples to OpenAI.

Blackwell is a comprehensive AI data center system offering customizable configurations to address a rapidly growing AI market, serving various needs from training to inference. Our major partners are receiving Blackwell systems as they prepare their data centers.

The demand for Blackwell is astounding, and we are working to ramp up supply to meet customer needs. Recently, Oracle announced the world’s first Zettascale AI cloud computing clusters, capable of scaling to over 131,000 Blackwell GPUs for advanced AI model training and deployment. Additionally, Microsoft has become the first cloud service provider to offer private previews of Blackwell-based cloud instances powered by NVIDIA GB200 and Quantum InfiniBand.


NVIDIA’s Blackwell Architecture Revolutionizes AI Performance

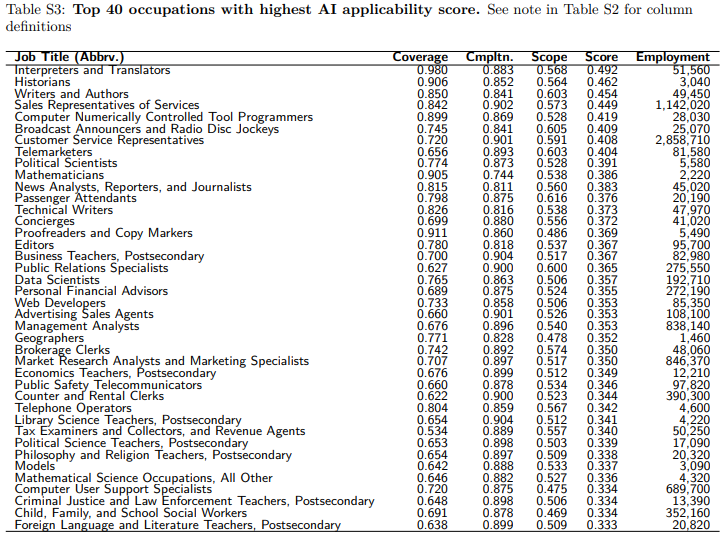

In the latest MLPerf Training benchmarks, NVIDIA Blackwell delivered a 2.2x performance improvement over the Hopper architecture.

Cost Efficiency Takes Center Stage

The data reveals a significant reduction in computing costs. To run the GPT-3 benchmark, only 64 Blackwell GPUs are necessary; this contrasts sharply with the 256 H100 GPUs, marking a 4x cost reduction. The NVIDIA Blackwell architecture, coupled with NVLink Switch, delivers up to 30x faster inference performance, enhancing efficiency for AI applications like OpenAI’s o1 model. New technological advances often spark a surge of innovative start-ups.
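The 4x figure tracks the ratio of GPU counts for the same benchmark. The minimal sketch below assumes, as a simplification not stated on the call, that cost scales with the number of GPUs. The optional `relative_cost_per_gpu` parameter is hypothetical. It shows how the ratio would shift if one Blackwell GPU cost more to run than one H100.

```python
def cost_ratio(old_gpus: int, new_gpus: int, relative_cost_per_gpu: float = 1.0) -> float:
    """Ratio of old-system cost to new-system cost for the same benchmark.

    relative_cost_per_gpu is a hypothetical factor: the cost of one new GPU
    expressed as a multiple of one old GPU (1.0 means equal cost per GPU).
    """
    return old_gpus / (new_gpus * relative_cost_per_gpu)


H100_GPUS = 256      # H100s needed for the GPT-3 benchmark, per the call
BLACKWELL_GPUS = 64  # Blackwell GPUs needed for the same benchmark, per the call

print(f"equal cost per GPU: {cost_ratio(H100_GPUS, BLACKWELL_GPUS):.1f}x")
print(f"hypothetical 1.5x cost per GPU: {cost_ratio(H100_GPUS, BLACKWELL_GPUS, 1.5):.2f}x")
```

Under the equal-cost assumption the ratio is exactly 4x. Any real per-GPU price difference would scale it down proportionally.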

Emerging AI Companies Thrive

Numerous AI-native companies are successfully providing AI services in various sectors. High-profile players such as Google, Meta, Microsoft, and OpenAI dominate the landscape, but companies like Anthropic, Perplexity, Mistral, Adobe Firefly, Runway, Midjourney, Lightricks, Harvey, Podium, Cursor, and Abridge are also making significant strides, bolstered by thousands of budding start-ups. The next significant waves in AI are enterprise AI and industrial AI.

NVIDIA AI Enterprise Fuels Innovation

NVIDIA AI Enterprise, featuring tools like NVIDIA NeMo and NIM microservices, serves as an operating platform for agentic AI solutions. Industry leaders such as Cadence, Cloudera, Cohesity, NetApp, Salesforce, SAP, and ServiceNow are collaborating with NVIDIA to accelerate the development of these transformative applications. Consulting giants like Accenture and Deloitte are also integrating NVIDIA AI into their enterprise solutions.

Accenture has created a dedicated business unit with 30,000 professionals trained in NVIDIA AI technologies to support this global expansion. With over 770,000 employees, Accenture is also implementing NVIDIA-powered agentic AI solutions within its own operations, including one application that reduces manual steps in marketing campaigns by 25% to 35%. Nearly 1,000 companies are using NVIDIA NIM, and the speed of its uptake shows in a significant increase in NVIDIA AI Enterprise revenue. Full-year revenue for NVIDIA AI Enterprise is expected to more than double compared to the previous year, with a growing pipeline of opportunities. Overall, NVIDIA's software, service, and support revenue is annualizing at $1.5 billion, and the company expects to exit the year at an annualized rate of over $2 billion.

Industrial AI Gains Momentum

Industrial AI and robotics are accelerating rapidly, driven by breakthroughs in physical AI: foundation models that understand the physical world. Just as NVIDIA NeMo serves enterprises building AI agents, NVIDIA built the Omniverse platform to let developers build, train, and operate industrial AI and robotics solutions.

Major manufacturers, including Foxconn, the world's largest electronics manufacturer, are deploying NVIDIA Omniverse to improve productivity and streamline operations. At its Mexico facility alone, Foxconn expects to reduce annual kilowatt-hour usage by over 30%.

From a regional perspective, NVIDIA's data center revenue in China grew sequentially, driven primarily by shipments of export-compliant Hopper products, although China's contribution remains below the levels seen before export restrictions took effect. NVIDIA expects the Chinese market to remain highly competitive and will continue to comply with export regulations while serving its customers.

Global AI Infrastructure Expansion

Leading cloud service providers (CSPs) in India are building AI factories, and by year-end they expect to have increased NVIDIA GPU deployments in the country nearly tenfold. Companies like Infosys, TCS, and Wipro are adopting NVIDIA AI Enterprise and training around half a million developers and consultants to build and manage AI agents on the platform. In Japan, SoftBank is building the country's most advanced AI supercomputer with NVIDIA Blackwell and Quantum InfiniBand, and is working with NVIDIA to turn its telecommunications network into a distributed AI network.

In the U.S., NVIDIA is collaborating with T-Mobile to launch similar initiatives. Major players in Japan, including Fujitsu, NEC, and NTT, are implementing NVIDIA AI Enterprise, supported by consulting firms like EY Strategy and Consulting to integrate this technology within various industries.

Networking Solutions Experience Growth

NVIDIA's networking revenue rose 20% year over year, with sequential growth in InfiniBand and Ethernet switches, SmartNICs, and BlueField DPUs. Although total networking revenue declined sequentially, demand remains strong, and NVIDIA anticipates sequential growth in Q4. CSPs and supercomputing centers continue to invest in NVIDIA's InfiniBand platform for their new H200 clusters.

Revenue from NVIDIA Spectrum-X Ethernet for AI more than tripled year on year, with multiple CSPs and internet companies preparing large-scale deployments. Traditional Ethernet was not designed for AI. Spectrum-X uses technology previously exclusive to InfiniBand to let customers scale GPU compute massively.

Using Spectrum-X, xAI's Colossus supercomputer, built with 100,000 Hopper GPUs, experienced zero application latency degradation and maintained 95% data throughput, compared with 60% for traditional Ethernet.
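To see what the 95% versus 60% throughput figures mean in practice, the minimal sketch below converts them into effective bandwidth. The 400 Gb/s nominal link speed is a hypothetical value chosen for illustration and was not mentioned on the call.

```python
NOMINAL_GBPS = 400.0  # hypothetical nominal link bandwidth, not from the call


def effective_gbps(nominal: float, utilization: float) -> float:
    """Bandwidth actually delivered when only a fraction of the link is usable."""
    return nominal * utilization


spectrum_x = effective_gbps(NOMINAL_GBPS, 0.95)   # 95% throughput cited for Spectrum-X
traditional = effective_gbps(NOMINAL_GBPS, 0.60)  # 60% cited for traditional Ethernet

print(f"Spectrum-X: {spectrum_x:.0f} Gb/s, traditional: {traditional:.0f} Gb/s")
print(f"advantage: {spectrum_x / traditional:.2f}x")
```

The roughly 1.58x advantage does not depend on the assumed nominal speed, because it cancels out of the ratio.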

Gaming and AI PCs Drive Revenue Growth

In the gaming sector, NVIDIA reported revenue of $3.3 billion, reflecting a 14% increase sequentially and a 15% year-on-year growth. The third quarter proved successful for gaming, with notable revenue contributions from notebooks, consoles, and desktops.

Strong back-to-school sales drove demand for RTX products, as consumers continue to choose GeForce RTX GPUs for gaming and creative applications. With inventory levels healthy, preparations for holiday sales are underway. NVIDIA has also begun shipping new GeForce RTX AI PCs with up to 321 AI TOPS from manufacturers like ASUS and MSI, with Microsoft's Copilot+ capabilities anticipated in Q4. These systems use RTX and AI capabilities for gaming, photo editing, image generation, and coding tasks.

This past quarter also marked the 25th anniversary of the GeForce 256, the world's first GPU. GPUs, which began by transforming computer graphics, are now driving the AI revolution.

ProViz and Automotive Sectors Show Robust Growth

ProViz revenue reached $486 million, up 7% sequentially and 17% year on year, with NVIDIA RTX workstations solidifying their status as a top choice for professional graphics and engineering tasks. AI is emerging as a significant growth factor, especially in autonomous vehicle simulation and media content creation.

In the automotive segment, NVIDIA reported record revenue of $449 million, up 30% sequentially and 72% year on year, driven by strong demand for self-driving platforms built on NVIDIA Orin. Volvo Cars, for example, is rolling out a fully electric SUV built on NVIDIA Orin and DriveOS.

Financial Metrics Reflect Ongoing Commitment

NVIDIA’s GAAP gross margin stands at 74.6%, with a non-GAAP gross margin of 75%. Although there was a sequential decline due to a shift in the product mix towards more complex, higher-cost systems within the data center, NVIDIA remains committed to innovation and operational efficiency.

GAAP and non-GAAP operating expenses each rose 9% sequentially, driven by higher compute and engineering development costs for new product introductions.

NVIDIA Reports Impressive Q3 Shareholder Returns Amid Growing Demand for Blackwell AI Infrastructure

In the third quarter, NVIDIA returned $11.2 billion to shareholders through share repurchases and cash dividends. Looking ahead, the company shared its expectations for Q4.

Revenue Projections Point to Continued Growth

NVIDIA expects total revenue of $37.5 billion, plus or minus 2%, reflecting continued demand for the Hopper architecture and the initial ramp of Blackwell products. Although demand significantly exceeds supply, NVIDIA expects to exceed its previous Blackwell revenue estimate of several billion dollars as its visibility into supply improves. In gaming, despite strong Q3 sales, the company expects Q4 revenue to decline sequentially due to supply constraints. GAAP and non-GAAP gross margins are projected at 73% and 73.5%, respectively, plus or minus 50 basis points.
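The guidance is given as midpoints with tolerances. The minimal sketch below converts them into explicit ranges. It shows that plus or minus 2% on $37.5 billion is $0.75 billion either way, and that 50 basis points is half a percentage point. The function name `guidance_band` is illustrative only.

```python
def guidance_band(midpoint: float, half_width: float) -> tuple[float, float]:
    """Return the (low, high) range implied by a midpoint and a +/- tolerance."""
    return midpoint - half_width, midpoint + half_width


REVENUE_MID = 37.5  # billions of US dollars
revenue_low, revenue_high = guidance_band(REVENUE_MID, REVENUE_MID * 0.02)  # +/- 2%

BASIS_POINT = 0.01  # one basis point is 0.01 percentage points
gaap_gm = guidance_band(73.0, 50 * BASIS_POINT)
non_gaap_gm = guidance_band(73.5, 50 * BASIS_POINT)

print(f"revenue: ${revenue_low:.2f}B to ${revenue_high:.2f}B")
print(f"GAAP gross margin: {gaap_gm[0]:.1f}% to {gaap_gm[1]:.1f}%")
print(f"non-GAAP gross margin: {non_gaap_gm[0]:.1f}% to {non_gaap_gm[1]:.1f}%")
```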

Blackwell: The Future of AI Infrastructure

Blackwell is a customizable AI infrastructure built from seven types of NVIDIA-designed chips, with multiple networking options, for both air- and liquid-cooled data centers. NVIDIA's primary focus is on meeting soaring demand and ensuring an optimal mix of configurations for customers. As Blackwell ramps, gross margins are expected to moderate to the low 70s, reaching the mid-70s once Blackwell is fully ramped.

Operating Expenses and Investments

NVIDIA projects GAAP operating expenses of about $4.8 billion and non-GAAP operating expenses of about $3.4 billion. As a data center-scale AI infrastructure company, NVIDIA continues to invest in building data centers to develop its hardware and software offerings and to support product launches. Other income and expenses are expected to be income of approximately $400 million, excluding gains and losses from nonaffiliated investments. GAAP and non-GAAP tax rates are expected to be 16.5%, plus or minus 1%.

Upcoming Events for Investors

For investors and the financial community, NVIDIA has a couple of noteworthy upcoming events. The company will participate in the UBS Global Technology and AI Conference on December 3 in Scottsdale. Additionally, at CES in Las Vegas, CEO Jensen Huang will give a keynote speech on January 6, followed by a Q&A session with financial analysts on January 7. The earnings call for discussing Q4 fiscal 2025 results is slated for February 26, 2025.

Now, let’s open the floor for questions. Operator, please initiate the Q&A session.

Questions & Answers:

Operator

[Operator instructions] We’ll take a moment to assemble the Q&A lineup. As a reminder, please limit your queries to one per person. Our first question comes from C.J. Muse of Cantor Fitzgerald. Your line is open.

C.J. Muse — Analyst

Good afternoon. Thank you for this opportunity. I’d like to discuss the debate regarding the scaling of large language models. We’re still in early stages, but what insights can you offer on this? How are you assisting customers as they navigate these challenges? Also, considering that some clusters have yet to leverage Blackwell, is this driving an increased demand for Blackwell? Thank you.

Jensen Huang — President and Chief Executive Officer

Foundation model pretraining scaling remains intact and ongoing. It is an empirical law rather than a fundamental physical law, but we've now identified two additional ways to scale. The first is post-training scaling, which began with reinforcement learning from human feedback and now also includes reinforcement learning from AI feedback and synthetic data. The second is inference-time scaling, demonstrated by OpenAI's o1 (code-named Strawberry): the more time the model spends processing, the higher the quality of its answer, much as we think before formulating an answer. With three ways to scale, demand for our infrastructure keeps rising.

The previous generation of foundation models used around 100,000 Hoppers, while the next generation is starting at 100,000 Blackwells, which shows where the industry is heading across pretraining, post-training, and inference-time scaling. Demand continues to grow from all of these sources.

Operator

Your next question comes from Toshiya Hari of Goldman Sachs. Your line is open.

Toshiya Hari — Analyst

Hi. Good afternoon. Thanks for taking my question. Jensen, you executed the mask change earlier this year, and there have been reports of heating issues. Can you update us on your ability to execute the roadmap you presented at GTC this year, including the upcoming Ultra release and the transition to Rubin in '26? Some investors are uncertain, so clarity on this would be appreciated. On supply constraints, are they driven by multiple components, or specifically by HBM? Are the constraints improving or worsening? Any insights would be helpful. Thank you.

Jensen Huang — President and Chief Executive Officer

Thank you. Regarding Blackwell production, we are scaling up operations significantly. In fact, as Colette mentioned earlier, we expect to deliver more Blackwells this quarter than we initially anticipated. Our supply chain team is working closely with our suppliers to increase Blackwell output, and we will continue to prioritize increasing supply through next year. It's important to note that overall demand currently outstrips supply.

This scenario is expected given the current generative AI boom and the onset of a new age in foundation models capable of advanced reasoning and long-term thinking. Blackwell’s demand remains strong.

NVIDIA’s Blackwell Systems Set for Major Ramp-up Amid Growing AI Demand

The competition among major cloud service providers (CSPs) heats up as NVIDIA prepares its Blackwell systems for rapid deployment.

Numerous companies, including Dell, Oracle, Microsoft, and Google, are racing to be first to market with Blackwell systems. The engineering behind this is complex: NVIDIA builds a full-stack AI supercomputer, then disaggregates it and integrates it into custom data centers around the world. Despite these challenges, NVIDIA has made significant progress on the Blackwell ramp.

NVIDIA reports that supply and planned shipments for this quarter exceed earlier estimates. Blackwell systems comprise many components, including seven custom chips, and come in multiple configurations: air-cooled or liquid-cooled, with different NVLink capacities. Integrating them into the world's data centers is complex, but NVIDIA has managed similar projects in the past. Blackwell system shipments were zero last quarter, while this quarter's shipments could total billions of dollars.

A vast network of partners contributes to this ramp-up, including TSMC, Amphenol, Vertiv, SK Hynix, Micron, Amkor, KYEC, Foxconn, Quanta, Wiwynn, Dell, HP, Super Micro, and Lenovo. Given the sheer number of collaborators, NVIDIA's coordination in scaling up Blackwell production is notable.

The supply chain is in strong shape for the Blackwell ramp, and NVIDIA remains on track with its roadmap, which it expects to deliver continued performance improvements across its platforms.

Not only does this roadmap facilitate enhanced performance, but it also leads to reduced training and inference costs. Such reductions ultimately make AI technology more accessible. It’s crucial to note that data centers, regardless of size, operate under power constraints. As such, optimizing performance per watt could generate significant revenues for partners.

Many data centers have evolved from holding tens of megawatts of power to housing hundreds of megawatts, with future projects aiming for gigawatt capacities. Hence, the focus on performance efficiency translates directly into revenue generation for NVIDIA’s customers.
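The link between performance per watt and revenue follows from a fixed power budget. If a facility is capped at a given number of megawatts, the tokens it can produce per year scale with tokens per joule. The minimal sketch below makes that concrete under entirely hypothetical inputs (100 MW, 1 or 2 tokens per joule, $1 per million tokens, full utilization). None of these numbers come from the call.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_token_revenue(power_mw: float, tokens_per_joule: float,
                         usd_per_million_tokens: float) -> float:
    """Annual revenue (USD) from a power-capped facility running at full power.

    All inputs are hypothetical; the point is that revenue is proportional
    to tokens per joule (performance per watt) when power is the constraint.
    """
    joules_per_year = power_mw * 1e6 * SECONDS_PER_YEAR  # 1 W = 1 J/s
    tokens_per_year = joules_per_year * tokens_per_joule
    return tokens_per_year / 1e6 * usd_per_million_tokens


baseline = annual_token_revenue(100, tokens_per_joule=1.0, usd_per_million_tokens=1.0)
improved = annual_token_revenue(100, tokens_per_joule=2.0, usd_per_million_tokens=1.0)
print(f"baseline: ${baseline / 1e9:.2f}B per year")
print(f"2x perf/watt: ${improved / 1e9:.2f}B per year ({improved / baseline:.1f}x)")
```

Doubling tokens per joule doubles revenue at the same power draw. That is the sense in which efficiency gains translate directly into customer revenue when power is the binding constraint.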

Operator

Your next question comes from Timothy Arcuri of UBS. Your line is open.

Timothy Arcuri — Analyst

Thanks. Can you discuss the trajectory for Blackwell’s ramp this year? In particular, you mentioned that Blackwell is expected to surpass several billions of dollars in shipments, and you previously suggested a crossover point with Hopper in April. Can you confirm if this remains accurate? Additionally, Colette, you noted that gross margins might fall to the low 70s during this ramp. Will this be the lowest point for margins, leading to subsequent improvements?

Jensen Huang — President and Chief Executive Officer

Colette, please start with the margin question.

Colette M. Kress — Chief Financial Officer, Executive Vice President

Sure, Tim. Our gross margins will be in the low 70s during the initial phase of the Blackwell ramp, because we are delivering many different custom configurations. As we improve costs and yields, we expect margins to grow into the mid-70s in later quarters.

Jensen Huang — President and Chief Executive Officer

Demand for Hopper is expected to persist through next year, and we anticipate Blackwell shipments will increase every quarter. This marks the start of a fundamental shift in computing. The move from hand-written code running on CPUs to machine learning running on GPUs is a profound change, and every business now recognizes that it needs machine learning, which is also the foundation of generative AI.

As we modernize $1 trillion worth of computing systems and data centers globally, we foresee the emergence of AI factories generating AI continuously, much like electricity. This transformation will set the stage for an entirely new industry, likely growing and evolving over several years.

Operator

Your next question comes from Vivek Arya of Bank of America Securities. Your line is open.

Vivek Arya — Analyst

Thanks for taking my question.


NVIDIA’s Leaders Discuss Future Growth and Market Dynamics

Analysts Question Gross Margin Goals and Hardware Trends

Colette, just to clarify, do you think it's a fair assumption that NVIDIA could recover to mid-70s gross margins in the back half of calendar '25? And then Jensen, my main question: historically, hardware deployment cycles have inevitably included some digestion along the way. When do you think we get to that phase, or is it too early to discuss because you're just at the start of Blackwell? How many quarters of shipments do you think are required to satisfy this first wave, and can you continue to grow into calendar '26? How should we prepare for the kind of digestion period we have seen historically in long-term, secular hardware deployments?

Colette M. Kress — Chief Financial Officer, Executive Vice President

OK. Vivek, thank you for the question. Let me clarify your question regarding gross margins. Could we reach the mid-70s in the second half of next year? Yes, I think it is a reasonable assumption or goal for us. However, we’ll have to monitor how the ramp-up progresses.

But yes, it is definitely possible.

Jensen Huang — President and Chief Executive Officer

To address your question, Vivek, I believe that there will be no digestion until we modernize $1 trillion worth of data centers. The majority of the world’s data centers were built when applications were coded manually and ran on CPUs. This model is no longer efficient. Companies looking to build new data centers should focus on advancements in machine learning and generative AI.

Over the next few years, it’s reasonable to assume that the world’s data centers will be modernized. IT continues to grow by about 20% to 30% annually. By 2030, the total market for computing in data centers could reach several trillion dollars, and we need to adapt to this shift from coding to machine learning.
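The "several trillion dollars by 2030" remark is a compound-growth claim. The minimal sketch below shows the arithmetic under stated assumptions: a hypothetical $1 trillion starting value (borrowing the installed-base figure from the answer above) compounding for six years at the 20% and 30% rates mentioned. Treating the installed base as the compounding starting point is our simplification, not a calculation given on the call.

```python
def compound(start: float, rate: float, years: int) -> float:
    """Value after `years` of growth at a constant annual `rate`."""
    return start * (1 + rate) ** years


START_TRILLIONS = 1.0  # hypothetical starting value, in trillions of US dollars
YEARS = 6              # assumed horizon: 2024 to 2030

for rate in (0.20, 0.30):
    value = compound(START_TRILLIONS, rate, YEARS)
    print(f"{rate:.0%} per year for {YEARS} years: ${value:.2f}T")
```

At these rates the value roughly triples to nearly quintuples over six years, which is consistent with a multi-trillion-dollar figure by 2030.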

The second factor at play is generative AI. We are witnessing the emergence of capabilities unlike anything seen before and an entirely new market segment. OpenAI is a prime example: it adds something new rather than replacing existing products.

Just like the introduction of the iPhone, these innovations opened doors to new industries. Numerous AI-native companies are emerging, much like how the internet and cloud revolutions birthed new platforms. As we embrace these changes, organizations are creating AI factories to foster the production of artificial intelligence.

Operator

Your next question comes from the line of Stacy Rasgon from Bernstein Research. Your line is open.

Stacy Rasgon — Analyst

Hi, thanks for taking my questions. Colette, could you clarify what you mean by “low 70s” for gross margins? Would 73.5% count, or are you thinking of something different? As for my main question, you have projected a significant rise in total data center revenues next quarter, likely several billion dollars, right?

It seems Blackwell should exceed those figures, but you mentioned Hopper is still performing well. Is Hopper expected to see a decline in the next quarter? If so, why? Are supply constraints affecting it? There’s been strong demand in China, but is that expected to taper in Q4? Any details about the Blackwell transition compared to Hopper would be helpful. Thank you.
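The logic behind the question can be written out. The total revenue guidance midpoint of $37.5 billion is about $2.4 billion above Q3's $35.1 billion. If Blackwell alone adds more than that, everything else, Hopper included, must shrink sequentially. The minimal sketch below assumes Blackwell's Q3 revenue was roughly zero, a simplification. The Blackwell amounts it loops over are hypothetical, and it uses total revenue rather than data center revenue.

```python
Q3_REVENUE = 35.1       # reported Q3 revenue, billions of US dollars
Q4_GUIDANCE_MID = 37.5  # midpoint of Q4 total revenue guidance

total_increase = Q4_GUIDANCE_MID - Q3_REVENUE


def change_outside_blackwell(total_increase: float, blackwell_increase: float) -> float:
    """Sequential change left over for Hopper and all other products."""
    return total_increase - blackwell_increase


print(f"implied sequential increase: ${total_increase:.1f}B")
for blackwell in (2.0, 3.0, 4.0):  # hypothetical Blackwell Q4 revenue, in billions
    rest = change_outside_blackwell(total_increase, blackwell)
    print(f"Blackwell ${blackwell:.1f}B -> everything else {rest:+.1f}B")
```

Any Blackwell contribution above roughly $2.4 billion implies a sequential decline elsewhere, which is what the question is probing.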

Colette M. Kress — Chief Financial Officer, Executive Vice President

First, regarding gross margins, when I mention “low,” I mean it could be around 71% to 72.5%. We may even exceed that. We’ll monitor yields and product improvements as we move through the year. As for Hopper, we’ve seen significant growth in orders for H200, and it remains our fastest-growing product. We will continue to sell Hopper in Q4, across various configurations, including potential sales in China. However, it’s also true that many customers are gearing up to adopt Blackwell.

The balance between Hopper and Blackwell in Q4 could lead to growth for both, but we’ll have to see how that unfolds.

Operator

Your next question comes from the line of Joseph Moore from Morgan Stanley. Your line is open.

Joseph Moore — Analyst

Thank you. Could you provide insights on the inference market? You've previously discussed Strawberry and the implications of scaling inference. You also noted that older Hopper clusters could be repurposed for inference tasks. Do you expect inference to outpace training over the next year?

Jensen Huang — President and Chief Executive Officer

We have high hopes for the inference market. Our vision is for companies to utilize inference extensively across departments, from marketing to supply chain management. The dream is that every business engages in inference continually, leading to a flourishing market for AI-native startups. These startups produce tokens and deliver AI across various platforms, enhancing user experiences in applications like Outlook or Excel.

Every interaction, such as opening a PDF document, can generate tokens. One of the tools I'm excited about is NotebookLM from Google, which I find incredibly useful.


Insights into NVIDIA’s Growth and Future Directions

As the AI landscape evolves, NVIDIA continues to refine its strategies and technologies to stay at the forefront. The discussion highlighted key aspects of its advancements in AI and networking.

The Rise of Physical AI

NVIDIA is at the helm of a transformative shift in artificial intelligence known as physical AI. Unlike large language models, which primarily understand human language, physical AI interprets the physical world. Because it can predict how the physical world will respond, it is valuable for industries that rely on robotics and AI. The Omniverse platform plays a crucial role in this development, offering an environment for creating and training AI systems using synthetic data and physics feedback.

Challenges in AI Inference

Despite exciting advancements, inference presents significant challenges. High accuracy, low latency, and high throughput are crucial for efficient AI performance and can be difficult to achieve simultaneously. As the complexity of applications increases, so does the need for advanced capabilities in NVIDIA’s architecture. This need for context-based understanding drives the growth of both model size and context length.
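The tension between latency and throughput can be illustrated with a deliberately simple model of batched token generation. The sketch below is a toy built on assumptions, not a description of NVIDIA's software or hardware. Each decoding step is assumed to cost a fixed overhead plus a per-sequence increment, and both millisecond values are hypothetical. Under this model, larger batches raise total throughput while lowering the token rate each individual user sees.

```python
from dataclasses import dataclass


@dataclass
class ToyDecoder:
    """Toy cost model for batched decoding; both parameters are hypothetical."""
    fixed_ms: float    # per-step overhead paid regardless of batch size
    per_seq_ms: float  # extra time per sequence in the batch

    def step_ms(self, batch: int) -> float:
        return self.fixed_ms + self.per_seq_ms * batch

    def per_user_tokens_per_s(self, batch: int) -> float:
        # Each sequence gets one token per step.
        return 1000.0 / self.step_ms(batch)

    def total_tokens_per_s(self, batch: int) -> float:
        return batch * self.per_user_tokens_per_s(batch)


model = ToyDecoder(fixed_ms=20.0, per_seq_ms=0.5)
for b in (1, 8, 64, 256):
    print(f"batch {b:>3}: {model.per_user_tokens_per_s(b):5.1f} tok/s per user, "
          f"{model.total_tokens_per_s(b):6.0f} tok/s total")
```

Serving more users per step improves throughput but slows each response, so meeting accuracy, latency, and throughput targets at once requires faster hardware and software rather than just bigger batches.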

NVIDIA’s Networking Business: A Closer Look

During the last quarter, NVIDIA's networking business declined 15% sequentially, as noted by Colette M. Kress, Chief Financial Officer. However, year-over-year growth remains strong, and the business has expanded considerably since NVIDIA acquired Mellanox in 2020. NVIDIA anticipates a recovery as it gears up for the Blackwell architecture, which promises enhanced networking capabilities.

Insights on Sovereign AI and Gaming Supply

Kress also discussed the increasing role of sovereign AI. Countries are developing their own models tailored to local languages and cultures, creating significant growth opportunities, particularly in Europe and Asia Pacific. Regarding gaming, Kress acknowledged supply constraints but expects a rebound by the start of the new calendar year.

Future Growth and Market Dynamics

Analysts, including Aaron Rakers from Wells Fargo and Ben Reitzes from Melius Research, raised questions about future growth prospects and market conditions in light of potential political changes in the U.S. and ongoing challenges with China. While Kress and CEO Jensen Huang emphasized a cautious approach to guidance, they noted that supply chain improvements could lead to accelerated growth in the coming quarters.

This ongoing dialogue about NVIDIA’s innovations, challenges, and market strategies reflects the company’s commitment to leading in both AI and networking as it navigates a rapidly changing technological landscape.


NVIDIA Embraces AI Revolution with Strong Growth and Future Outlook

Understanding the AI Ecosystem and NVIDIA’s Role

Stewart Stecker — Senior Director, Investor Relations

We are currently focused on our ongoing quarter while preparing to ship products related to Blackwell. Our suppliers around the world are working in harmony with us. Once we transition to the next quarter, we will provide more insight into our ramp-up for upcoming releases.

Leadership’s Commitment to Regulations and Customer Support

Jensen Huang — President and Chief Executive Officer

Regardless of the decisions made by the new administration, we will fully support their regulations while continuing to assist our customers and compete effectively in the market. Balancing compliance, customer support, and competition is our key focus.

An Analyst’s Inquiry into AI Computing Dynamics

Operator

Your final question comes from the line of Pierre Ferragu of New Street Research. Your line is open.

Pierre Ferragu — Analyst

Thank you for taking my question. Jensen, can you share how the overall AI ecosystem divides compute resources between pretraining, reinforcement learning, and inference? Specifically, how does that split look across customers or leading models today, and which areas are growing fastest?

NVIDIA’s Strategic AI Insights

Jensen Huang — President and Chief Executive Officer

Currently, the majority of computing resources are focused on pretraining foundation models. As new techniques emerge, the more work that is done during pretraining and post-training, the less has to be done at inference, which lowers inference costs. Even so, some tasks still require real-time, in-context reasoning at inference time. All three components (pretraining, reinforcement learning, and inference) are expanding, reflecting the growing needs of the field. With multimodal foundation models in play, the amount of data used for training, including petabytes of video, is staggering. I expect demand to keep rising across all three areas, requiring more computing power to improve performance and lower costs.

Final Thoughts on NVIDIA’s Growth and AI Future

Operator

Thank you. I’d like to turn the call back over to Jensen Huang for closing remarks.

Jensen Huang — President and Chief Executive Officer

Our remarkable growth stems from two fundamental trends driving the adoption of NVIDIA computing. First, we are witnessing a major platform shift from traditional coding to machine learning, from running code on CPUs to processing neural networks on GPUs. The existing $1 trillion base of data center infrastructure is being modernized for Software 2.0, which is built on AI.

Second, we find ourselves in the midst of an AI boom. Generative AI is establishing itself not just as a tool but as a new industry, potentially a multi-trillion-dollar market. Demand for Hopper is soaring, and Blackwell is now in full production, driven by a larger number of foundation model developers than last year. The rapid increase in pretraining and post-training capacity, along with the growth of AI-native startups and successful inference services, is fueling this demand. Moreover, new scaling principles, such as the test-time scaling introduced with OpenAI's o1 model, highlight the immense computing requirements involved.

AI is radically changing industries globally. Businesses are increasingly adopting AI agents as co-workers that help employees with their tasks to boost productivity. Investments in industrial robotics are also surging, fueled by advances in physical AI, which requires new training infrastructure to process substantial volumes of data.

Recognizing the significance of AI, nations are committed to developing their national AI infrastructures. The age of AI is here, expansive and multifaceted. NVIDIA’s integrated expertise positions us to tap into substantial opportunities across the AI and robotics landscape, serving everything from hyperscale cloud services to local AI solutions.

Thank you for joining us today; we look forward to our next discussion.

Operator

[Operator signoff]


Call Participants:

Stewart Stecker — Senior Director, Investor Relations

Colette M. Kress — Chief Financial Officer, Executive Vice President

C.J. Muse — Analyst

Jensen Huang — President and Chief Executive Officer

Toshiya Hari — Analyst

Timothy Arcuri — Analyst

Vivek Arya — Analyst

Stacy Rasgon — Analyst

Joseph Moore — Analyst

Aaron Rakers — Analyst

Atif Malik — Analyst

Ben Reitzes — Melius Research — Analyst

Pierre Ferragu — Analyst

This article is a transcript of this conference call produced for The Motley Fool. While we strive for our Foolish Best, there may be errors, omissions, or inaccuracies in this transcript. As with all our articles, The Motley Fool does not assume any responsibility for your use of this content, and we strongly encourage you to do your own research, including listening to the call yourself and reading the company’s SEC filings. Please see our Terms and Conditions for additional details, including our Obligatory Capitalized Disclaimers of Liability.

The Motley Fool has positions in and recommends Nvidia. The Motley Fool has a disclosure policy.

The views and opinions expressed herein are the views and opinions of the author and do not necessarily reflect those of Nasdaq, Inc.